Getting started with Data Science on Kubernetes - Jupyter and Zeppelin

It's no secret that the data analytics community has been moving towards using more open-source and cloud-based tools. Apache Zeppelin and Jupyter notebooks are two of the most popular tools used by data scientists today. In this blog post, we will show you how to easily integrate Ilum with these notebooks so that you can run your data analytics workloads on k8s.

Kubernetes for Data Science

With the rise of big data and data-intensive applications, managing and deploying data science workloads has become increasingly complex. This is where Kubernetes comes in, as it provides a scalable and flexible platform for running interactive computing platforms such as Jupyter and Zeppelin.

The data science community has been using Jupyter notebooks for a long time now. But what about running data science on Kubernetes? Can we use Jupyter notebooks on k8s? The answer is yes! This blog post will show you how to run Data Science on Kubernetes with Jupyter notebooks and Zeppelin.

We will use Apache Spark and Ilum interactive sessions to boost computation. The interactive sessions feature allows you to submit Spark code and see the results in real time. This is a great way to explore data and try out different algorithms. With Apache Spark, you can easily process large amounts of data, and the interactive sessions feature of Ilum makes it easy to try out different code snippets and see the results immediately.

Ilum, Apache Livy and Sparkmagic

Ilum was created to make it easy for data scientists and engineers to run Spark on Kubernetes. We believe that by simplifying that process, we can make it easier for users to get started with these technologies and increase the adoption of k8s within the Data Science community.

Ilum implements the Livy REST interface, so it can be used as a replacement for Apache Livy in any environment. We'll show how to integrate Ilum with Sparkmagic.

Both Ilum and Livy can launch long-running Spark contexts that can be used for multiple Spark jobs by multiple clients. However, there are some key differences between the two.

Ilum is well-maintained and actively developed software, updated with new libraries and features. Livy, on the other hand, has a robust community that has created integrations with many applications. Unfortunately, the development of Livy has stalled and because of that Livy is not ready for Cloud Native transformation.

Ilum can easily scale up and down and is highly available. A big advantage that Ilum has over Livy is that it works on Kubernetes. It also allows integration with YARN.

Both tools provide an easy-to-use web interface for monitoring Spark clusters and Spark applications, but Livy's appears outdated and is very limited.

So, why shouldn’t we take full advantage of Ilum and Livy?

Ilum-livy-proxy

Ilum has an embedded component that implements the Livy API. It allows users to take advantage of Livy’s REST interface and the Ilum engine simultaneously.
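Because the proxy speaks the Livy REST protocol, a client can talk to it the same way it would talk to Apache Livy. The sketch below builds, but does not send, the two requests that a client such as Sparkmagic typically issues: `POST /sessions` to create an interactive session and `POST /sessions/{id}/statements` to run code in it. The base URL `http://ilum-livy-proxy:8998` and the helper names are assumptions for illustration only; use the address of the livy-proxy service in your own cluster.

```python
import json
import urllib.request

# Assumed address of the Livy-compatible endpoint; adjust to your cluster.
LIVY_URL = "http://ilum-livy-proxy:8998"


def build_create_session_request(base_url, kind="spark"):
    """Build a Livy `POST /sessions` request asking for an interactive session.

    In Livy, kind "spark" means a Scala session.
    """
    body = json.dumps({"kind": kind}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/sessions",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def build_statement_request(base_url, session_id, code):
    """Build a Livy `POST /sessions/{id}/statements` request that runs `code`."""
    body = json.dumps({"code": code}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/sessions/{session_id}/statements",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Nothing is sent here; pass a request to urllib.request.urlopen() to execute it.
create = build_create_session_request(LIVY_URL)
run = build_statement_request(LIVY_URL, 0, "sc.parallelize(1 to 100).count()")
print(create.get_method(), create.full_url)
print(run.get_method(), run.full_url)
```

Keeping request construction separate from sending makes it easy to inspect exactly what would go over the wire before pointing the client at a real cluster.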

We are working hard to add Python support to Ilum, in addition to the existing Scala support. Keep an eye on our roadmap to stay up to date with our progress.

Zeppelin and Jupyter

Zeppelin and Jupyter are web-based notebooks that enable interactive data analytics and collaborative document creation with different languages.

Jupyter notebooks are especially popular among Python users. The project has evolved from the IPython environment but now boasts support for many languages.

The list of Jupyter's kernels is huge.

Zeppelin is well integrated with big data tools. In Zeppelin, it is possible to combine different interpreters in one notebook and then run them in different paragraphs.

You can find the list of interpreters supported by Zeppelin in the Zeppelin documentation.

Integrating notebooks with Ilum

Setup Ilum

Let’s start by launching the Apache Spark cluster on Kubernetes. With Ilum, this is pretty easy to do. We will use minikube for the purpose of this article. The first thing we have to do is start a Kubernetes cluster:

minikube start --cpus 4 --memory 12288 --addons metrics-server

Once minikube is running, we can move on to the installation of Ilum. First, let's add a helm chart repository:

helm repo add ilum https://charts.ilum.cloud

Ilum includes both Zeppelin and Jupyter, but they must be enabled manually in the installation settings together with ilum-livy-proxy.

helm install ilum ilum/ilum --set ilum-zeppelin.enabled=true --set ilum-jupyter.enabled=true --set ilum-livy-proxy.enabled=true

It can take some time to initialize all pods. You can check the status with the command:

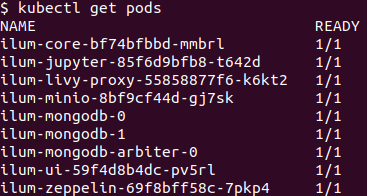

kubectl get pods
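If you would rather check readiness from a script than by eye, the following is a minimal sketch that inspects the JSON form of the same command (`kubectl get pods -o json`) and lists the pods that are not ready yet. The helper names `fetch_pods` and `pods_not_ready` are made up for this example, and the readiness rule (phase `Running` with every container reporting `ready`) is a simplifying assumption.

```python
import json
import subprocess


def fetch_pods():
    """Run `kubectl get pods -o json` and return the parsed pod list."""
    result = subprocess.run(
        ["kubectl", "get", "pods", "-o", "json"],
        check=True,
        capture_output=True,
        text=True,
    )
    return json.loads(result.stdout)


def pods_not_ready(pod_list):
    """Return the names of pods that are not Running with all containers ready."""
    pending = []
    for pod in pod_list.get("items", []):
        status = pod.get("status", {})
        phase = status.get("phase")
        if phase == "Succeeded":
            continue  # completed one-off pods are not expected to be "ready"
        containers = status.get("containerStatuses", [])
        ready = (
            phase == "Running"
            and bool(containers)
            and all(c.get("ready") for c in containers)
        )
        if not ready:
            pending.append(pod["metadata"]["name"])
    return pending


# Usage against a live cluster (requires kubectl on the PATH):
#   print(pods_not_ready(fetch_pods()))
```

An empty list means every pod in the current namespace is up, so it is safe to move on to the port-forward steps.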

Jupyter

Let's start with:

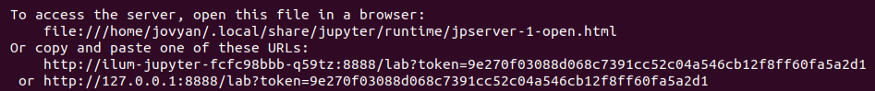

kubectl port-forward svc/ilum-jupyter 8888:8888

Ilum uses Sparkmagic to work with Apache Spark in Jupyter notebooks. By default, Jupyter (with the Sparkmagic library installed) runs on port 8888. To access the server, open your browser and go to localhost:8888. You will need to log in with the password from the pod logs, or you can copy the whole URL from the logs and paste it into the browser with "localhost" substituted for the domain.

kubectl logs ilum-jupyter-85f6d9bfb8-t642d

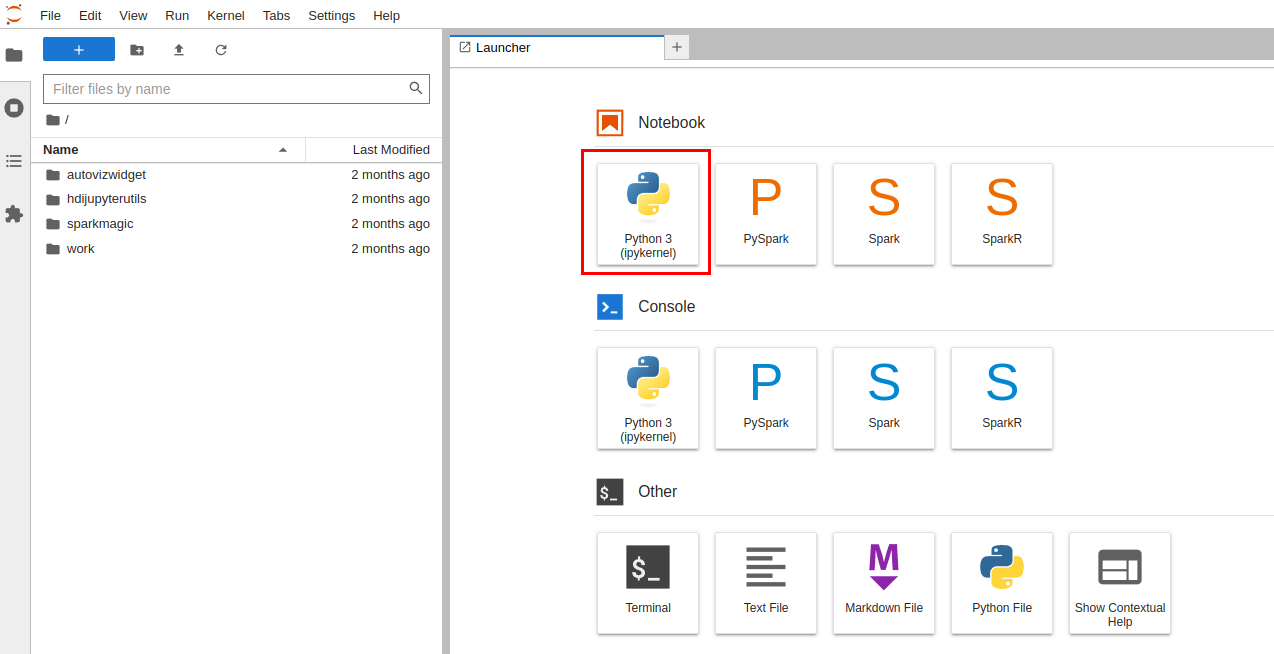
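The copy-and-substitute step can also be scripted. The sketch below searches log text for the first URL carrying a `?token=` parameter and rewrites its host to `localhost`. The exact log line format is an assumption (it varies between Jupyter versions), and `localhost_login_url` is a hypothetical helper name.

```python
import re
from urllib.parse import urlsplit, urlunsplit

# Assumption: the Jupyter server logs a URL that ends with ?token=<value>.
TOKEN_URL = re.compile(r"https?://\S+\?token=\w+")


def localhost_login_url(log_text, port=8888):
    """Return the first tokenized URL in the logs, pointed at localhost.

    Returns None when no such URL is found.
    """
    match = TOKEN_URL.search(log_text)
    if match is None:
        return None
    parts = urlsplit(match.group(0))
    # Keep scheme, path and token; replace only the host and port.
    return urlunsplit(
        (parts.scheme, f"localhost:{port}", parts.path, parts.query, "")
    )


# Usage: feed it the output of `kubectl logs <your-jupyter-pod>`, for example
#   kubectl logs <your-jupyter-pod> > jupyter.log
#   print(localhost_login_url(open("jupyter.log").read()))
```

The port argument should match the local side of the port-forward, which is 8888 in this walkthrough.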

Once Jupyter is open, we have to launch a Python 3 notebook:

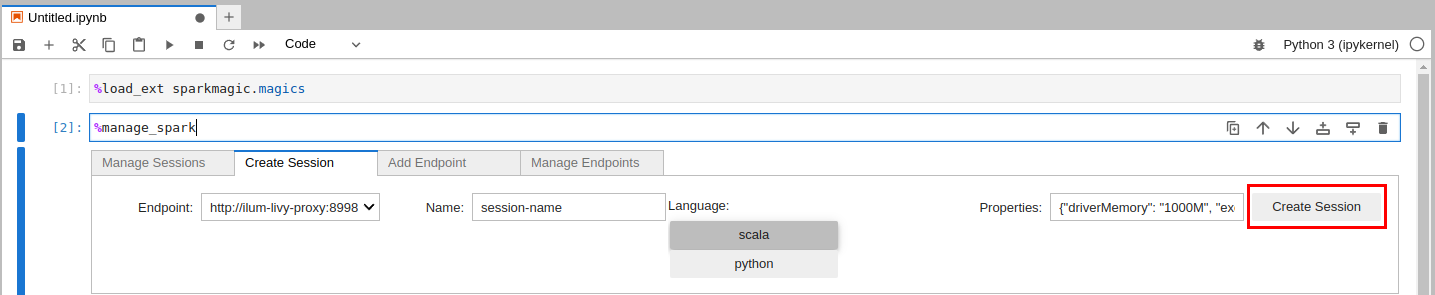

Let's now run the following commands to load Sparkmagic and set up an endpoint.

1. First, we need to load the Sparkmagic extension. You can do this by running the following command:

%load_ext sparkmagic.magics

2. Next, we need to set up an endpoint. An endpoint is simply a URL that points to a specific Spark cluster. You can do this by running the following command:

%manage_spark

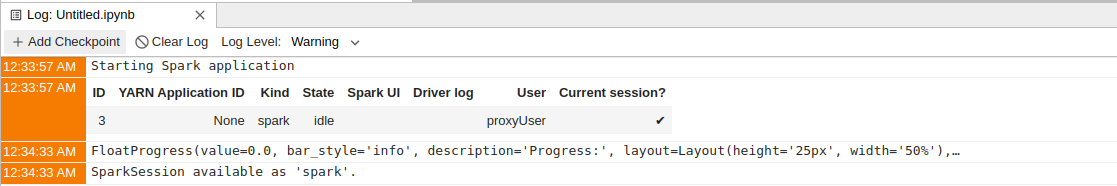

The bundled Jupyter is ready to work out of the box and has a predefined endpoint address that points to livy-proxy. All you have to do is choose this endpoint from the dropdown list and click the create session button. It is as simple as that. Jupyter will then connect to ilum-core via ilum-livy-proxy to create a Spark session. It can take several minutes before the Spark container is up and running. Once it is ready, you will receive a message that a Spark session is available.

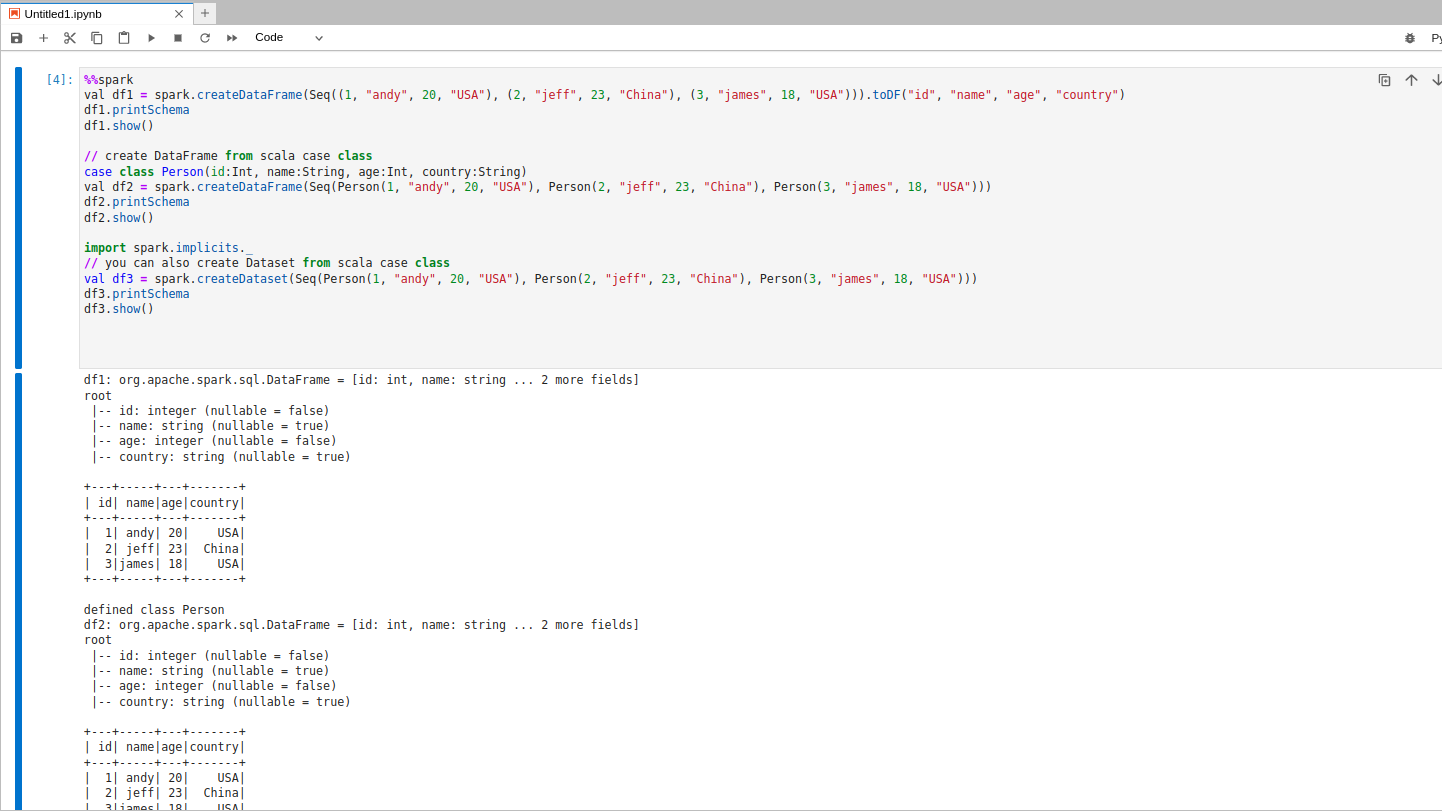

You can now use your Ilum session to run Spark code against it. The name that you assigned to the session will be used in the %%spark magic to run the code.
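Behind the scenes, Sparkmagic submits each `%%spark` cell to the Livy-compatible endpoint as a statement and shows the output once the statement finishes. As a rough sketch of what that result looks like, the helper below extracts the text output from a statement object shaped like the one in the Apache Livy REST documentation. The sample payload is hand-written for illustration and is not captured from a real cluster, and `statement_output` is a hypothetical helper name.

```python
import json


def statement_output(statement):
    """Return the plain-text output of a finished Livy statement.

    Raises RuntimeError if the statement is still running or ended in error.
    """
    state = statement.get("state")
    if state != "available":
        raise RuntimeError(f"statement not finished yet: {state}")
    output = statement["output"]
    if output["status"] != "ok":
        raise RuntimeError(f"{output.get('ename')}: {output.get('evalue')}")
    return output["data"]["text/plain"]


# Hand-written sample in the shape of a Livy statement response (illustrative only).
sample = json.loads("""
{
  "id": 0,
  "state": "available",
  "output": {
    "status": "ok",
    "execution_count": 0,
    "data": {"text/plain": "res0: Long = 100"}
  }
}
""")

print(statement_output(sample))  # prints: res0: Long = 100
```

In the notebook you never handle this JSON yourself; it is only useful to know when debugging a session through the REST interface directly.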

Apache Zeppelin

Let's start with:

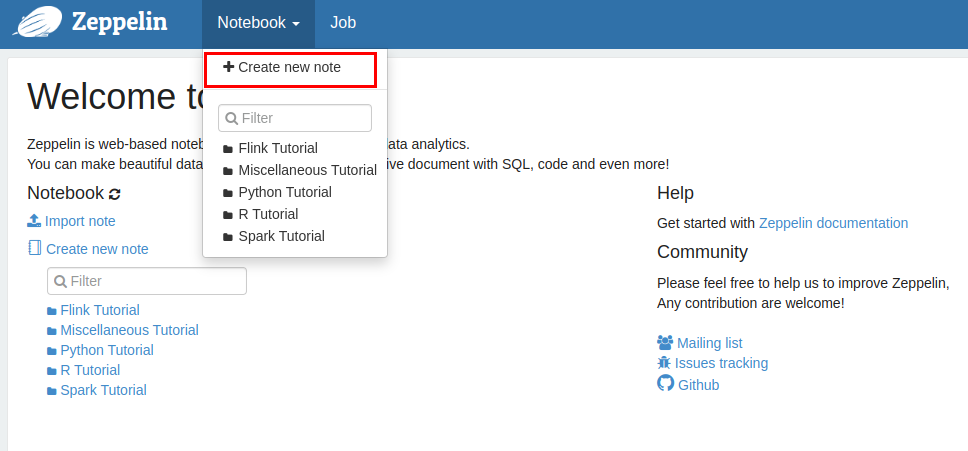

kubectl port-forward svc/ilum-zeppelin 8080:8080

As with Jupyter, we have also bundled the Zeppelin notebook with Ilum. Please be aware that container creation can take longer because of the larger image size. Once the container is created and running, you can access the Zeppelin notebook in your browser at http://localhost:8080.

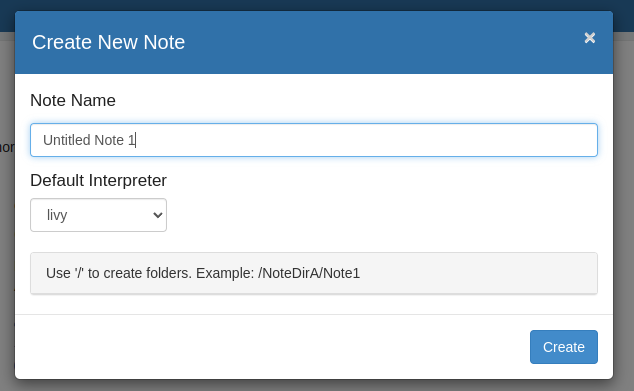

1. To execute code, we need to create a note:

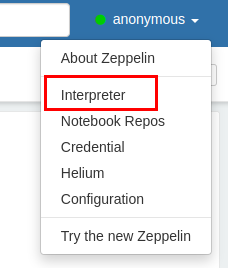

2. Since communication with Ilum is handled via livy-proxy, we need to choose livy as the default interpreter.

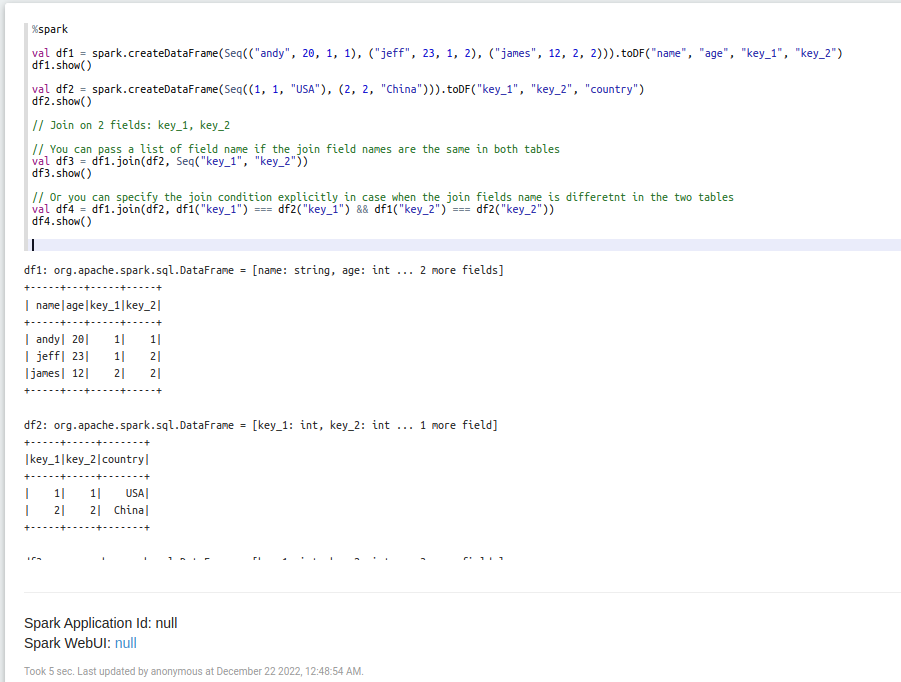

3. Now let’s open the note and put some code into the paragraph:

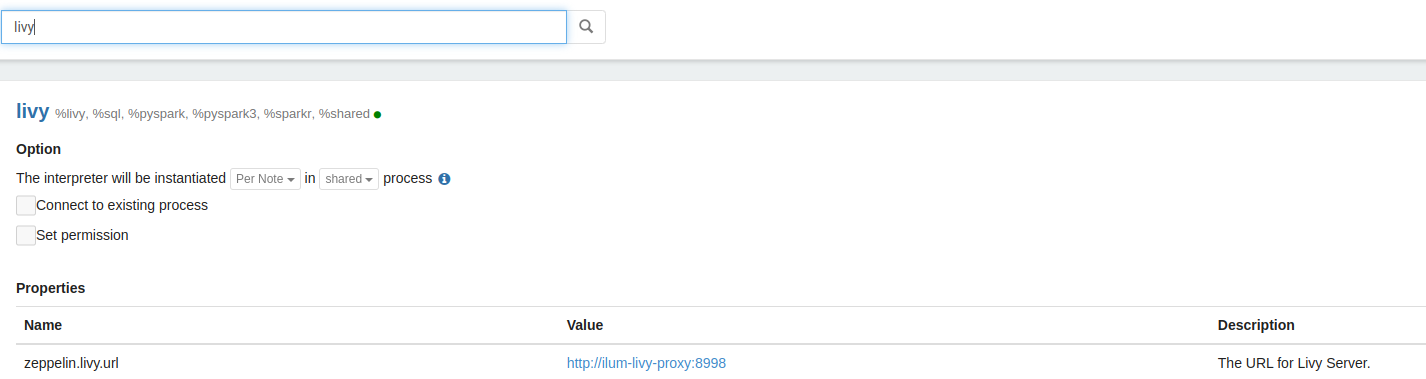

As with Jupyter, Zeppelin also comes with the predefined configuration needed for Ilum. You can customize the settings easily. Just open the context menu in the top right corner and click the interpreter button.

There is a long list of interpreters and their properties that can be customized.

Zeppelin provides three different modes to run the interpreter process: shared, scoped, and isolated. You can learn more about the interpreter binding modes in the Zeppelin documentation.

Jupyter and Zeppelin are two of the most popular tools for data science, and they are now available on k8s. This means that data scientists can now use Kubernetes to manage and deploy their data science applications.

Ilum offers a few advantages for data scientists who use Jupyter and Zeppelin. First, Ilum can provide a managed environment for Jupyter and Zeppelin. This means that data scientists don't have to worry about managing their own Kubernetes cluster. Second, Ilum offers an easy way to get started with data science on Kubernetes. With Ilum, data scientists can simply launch a Jupyter or Zeppelin instance and begin exploring their data.

So if you're a data scientist who wants to get started with data science on Kubernetes, check out Ilum. With Ilum, you can get started quickly and easily, without having to worry about managing your own Kubernetes cluster.

Overall, data science on Kubernetes can be a great way to improve one's workflow and allow for more collaboration. However, it is important to get started with a basic understanding of the system and how it works before diving in too deep. With that said, Jupyter and Zeppelin are two great tools to help with getting started with Data Science on Kubernetes.

Is Kubernetes Really Necessary for Data Science?

Kubernetes is an open-source system for automating the deployment, scaling, and management of containerized applications. It groups containers that make up an application into logical units for easy management and discovery.

Data science is a process of extracting knowledge or insights from data in various forms, either structured or unstructured, which can be used to make decisions or predictions.

So, is Kubernetes really necessary for data science? The answer is yes and no. While k8s can help automate the deployment and management of data science applications, it is not strictly necessary. Data scientists can still use other methods to deploy and manage their applications. However, Kubernetes can make their life easier by providing a unified platform for managing multiple data science applications.